An important part of this project was data extraction. The WSL Institute for Snow and Avalanche Research (SLF) kept a very good record of all the published avalanche and weather reports. Most of it was shared as static documents (PDF or GIF) 15 years ago, so we decided to extract the information from them to make our analysis and visualisation possible. The following article walks through our extraction procedure; you can browse the results in EXPLORE.

1. Data scraping

Our dataset consists of information retrieved from the SLF archives. We asked the managers for access to their database, but they couldn't help us. No problem: we scraped hard core for one night.

Several filters were used to select the folders we wished to extract:

- language: files are often available in all 4 languages (de, fr, it, en). When this is the case, we download only one version, in the following order of preference: en, fr, de. German is the default language (always present).

- too specific: some files are not interesting for now (too specific or too regional). We don't download the snow profiles or the regional snow reports.

- color or black and white: maps are available both in color and in black and white. Colors are easier to handle than textures for computer vision algorithms, so we drop the black-and-white maps.
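The three filters can be sketched in a few lines. This is a hypothetical illustration, not the code we ran: the naming conventions it relies on (a `_de`/`_fr`/`_it`/`_en` suffix before the extension, a `_bw` marker for black-and-white maps, and the `snowprofile`/`regional` keywords) are assumptions made for the example, not the actual SLF archive layout.

```python
import os
from collections import defaultdict

LANGUAGES = ("de", "fr", "it", "en")
PREFERENCE = ("en", "fr", "de")   # German is always present, so it is the last resort

# Hypothetical naming conventions, for illustration only.
SKIP_KEYWORDS = ("snowprofile", "regional")   # too specific / too regional
BW_MARKER = "_bw"                              # black-and-white map variant


def keep_file(path):
    """Drop snow profiles, regional reports and black-and-white maps."""
    name = path.lower()
    return not any(k in name for k in SKIP_KEYWORDS) and BW_MARKER not in name


def split_language(path):
    """'2005/bulletin_fr.pdf' -> ('2005/bulletin.pdf', 'fr'); language is None if absent."""
    root, ext = os.path.splitext(path)
    base, sep, lang = root.rpartition("_")
    if sep and lang in LANGUAGES:
        return base + ext, lang
    return path, None


def pick_language(available):
    """First language of PREFERENCE that is available, or None (e.g. Italian only)."""
    return next((lang for lang in PREFERENCE if lang in available), None)


def select_files(paths):
    """Keep one language version per document, following PREFERENCE."""
    versions = defaultdict(dict)                  # document -> {language: path}
    for path in filter(keep_file, paths):
        doc, lang = split_language(path)
        versions[doc][lang] = path
    selected = []
    for by_lang in versions.values():
        if None in by_lang:                       # language-independent file
            selected.append(by_lang[None])
        else:
            lang = pick_language(by_lang)
            if lang is not None:
                selected.append(by_lang[lang])
    return sorted(selected)
```

Grouping by document first means the preference order is applied per report, so a report that exists only in German still gets downloaded.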

Now we can use the Python script ../tools/download.py to fetch the ~30'000 files into the same directory structure as the archive.

```shell
python3 tools/download.py data/file_to_download. ./data/slf --prefix https://www.slf.ch/fileadmin/user_upload/import/lwdarchiv/public/ --nproc 4
```
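The source of tools/download.py is not reproduced here. As a hypothetical sketch of what a downloader with that interface could look like (a list of relative paths, an output directory, a URL `--prefix` and `--nproc` workers), the following mirrors each path under the output directory. It uses a thread pool rather than processes because downloading is I/O-bound; that choice, and the helper names, are our assumptions.

```python
import os
import urllib.request
from concurrent.futures import ThreadPoolExecutor


def build_jobs(rel_paths, prefix, out_dir):
    """Pair each relative path with its URL and a local path mirroring the archive hierarchy."""
    jobs = []
    for rel in rel_paths:
        rel = rel.strip().strip("/")
        if not rel:                                   # skip blank lines in the list
            continue
        url = prefix.rstrip("/") + "/" + rel
        dest = os.path.join(out_dir, *rel.split("/"))
        jobs.append((url, dest))
    return jobs


def fetch(job):
    """Download one file unless it already exists, so an interrupted run can resume."""
    url, dest = job
    if not os.path.exists(dest):
        os.makedirs(os.path.dirname(dest), exist_ok=True)
        urllib.request.urlretrieve(url, dest)
    return dest


def download_all(jobs, nproc=4):
    """Run the downloads on nproc worker threads; returns the local paths in job order."""
    with ThreadPoolExecutor(max_workers=nproc) as pool:
        return list(pool.map(fetch, jobs))
```

Feeding `build_jobs` the lines of the download list, the SLF prefix and `./data/slf`, then calling `download_all(jobs, nproc=4)`, reproduces the shape of the command above.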

We got approximately 5 GB of data, which we stored in an S3 bucket (s3://ada-avalanches) with the same hierarchy as the SLF archives. Let us know if you want access.

2. Map extraction

The most challenging part of the data extraction was retrieving the necessary information from the images provided by the SLF archives website. Here are some sample maps; we had more than 10'000 of them, so we had to automate the process ;)

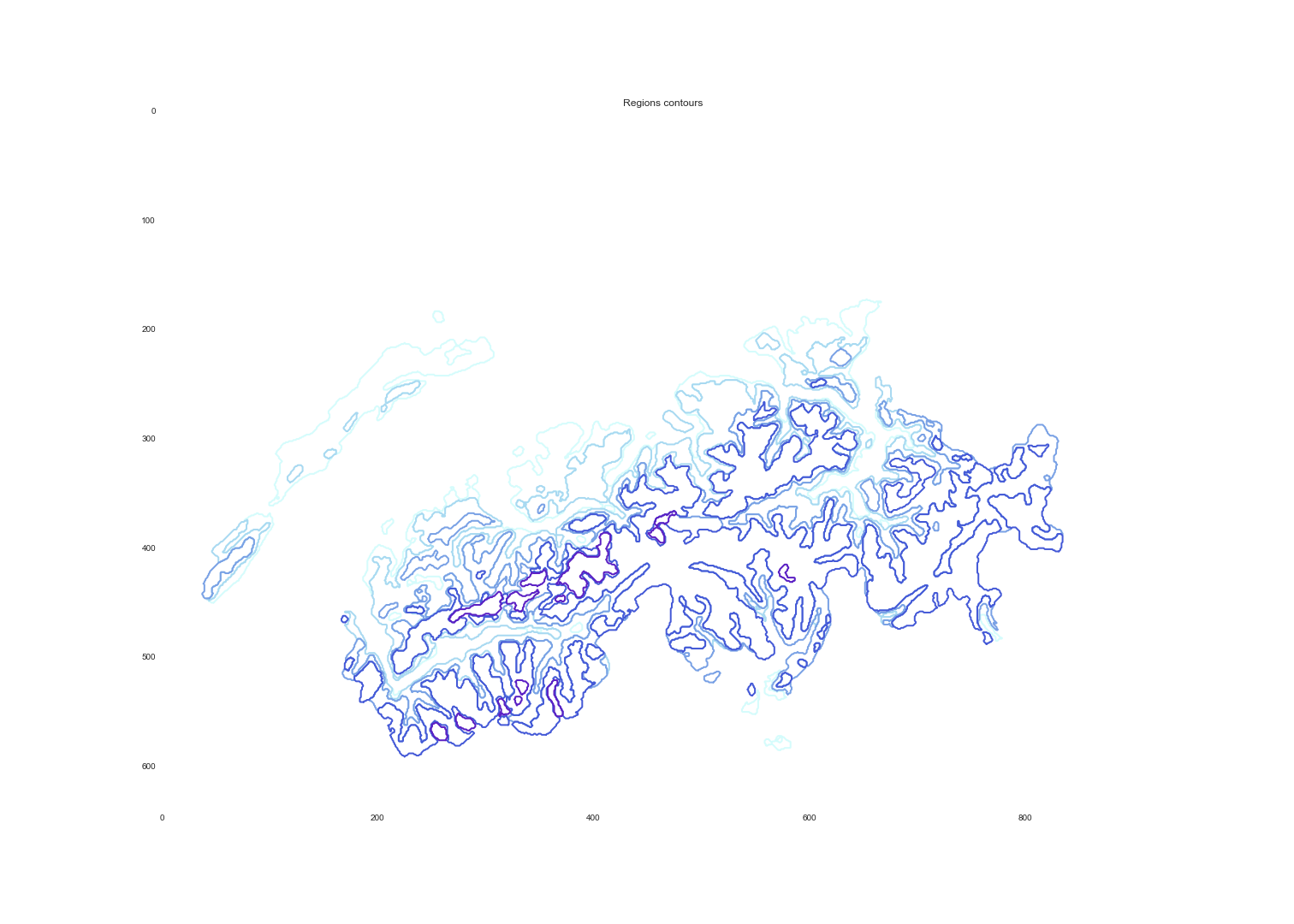

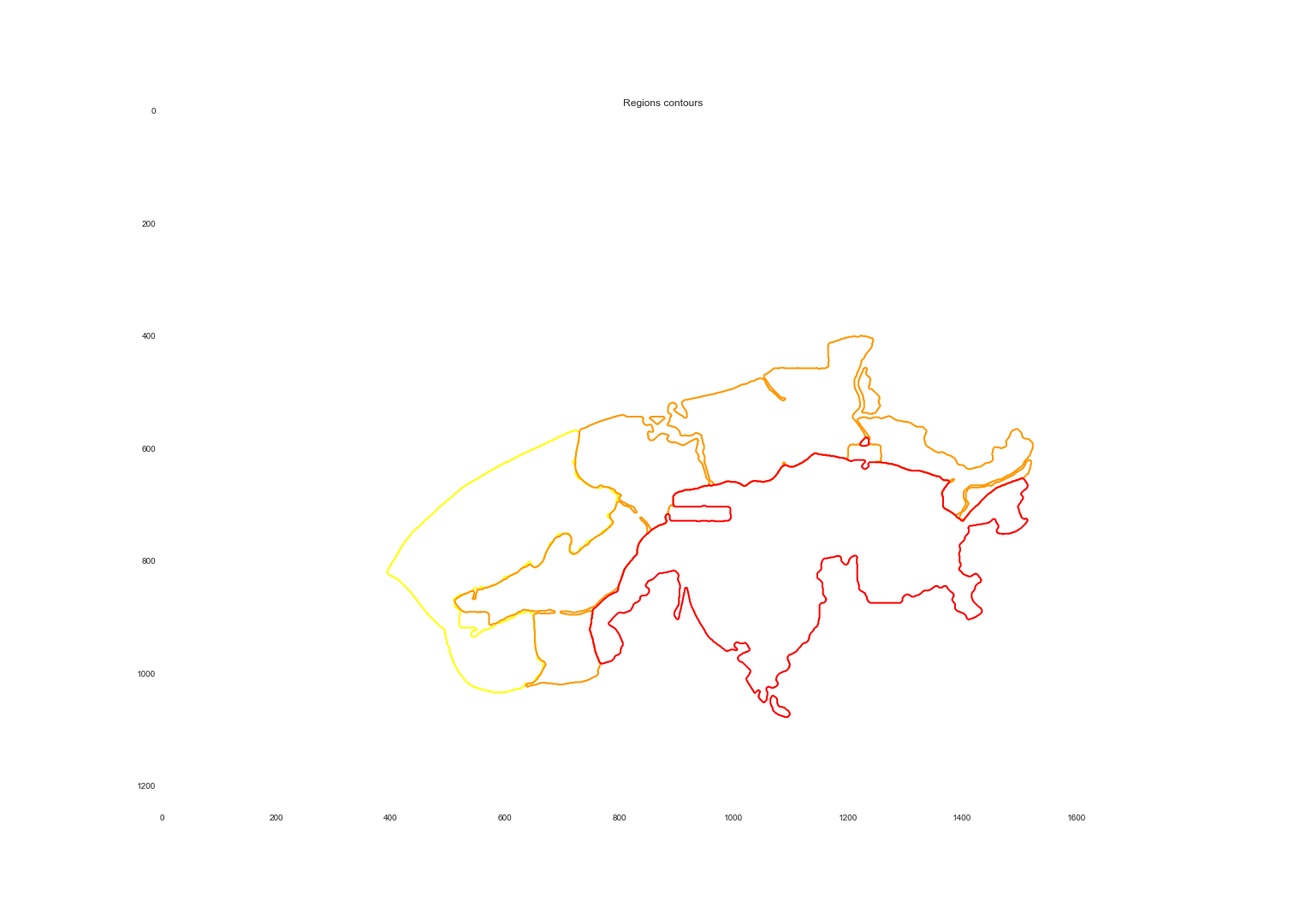

We developed a handful of methods to extract the snow and danger regions from the color maps:

- grey removal: by looking at the standard deviation of the color channels of each pixel, we could threshold the greys (pixels whose channels are nearly equal) and remove them from the original image.

- color projection: because of noise in the image and minor differences in color tones, we had to project each pixel's color onto the closest color in the reference key (using Euclidean distance).

- mask clipping: many images had different sizes or were centered differently. We created binary masks to remove the legend, the title and sometimes extra logos or noise.

- smoothing: to remove small imperfections or noisy color projections, we used a median filter in order to get smoother regions and ease the task of contour detection.

- region detection: using color detection, we extracted the contour of each region.
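The steps above can be sketched with NumPy alone. This is a hypothetical illustration, not the code of tools/map_extractor.py: the palette values, grey threshold and window size are invented, and the median filter is applied to the label image. Region detection stops at one boolean mask per legend color plus a crude boundary; tracing ordered polygons would typically rely on a library routine such as OpenCV's `cv2.findContours` or scikit-image's `skimage.measure.find_contours`, and `scipy.ndimage.median_filter` is a lighter-memory alternative to the windowed median below.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Hypothetical legend key (RGB); real values come from each map's legend.
# Label k + 1 is assigned to PALETTE[k]; label 0 means "background / removed".
PALETTE = np.array([
    [204, 255, 102],  # hypothetical danger level 1 color
    [255, 255, 0],    # hypothetical danger level 2 color
    [255, 153, 0],    # hypothetical danger level 3 color
    [255, 0, 0],      # hypothetical danger level 4 color
], dtype=float)


def grey_mask(img, std_threshold=10.0):
    """True where R, G, B are nearly equal (greys, white, black text)."""
    return img.astype(float).std(axis=2) < std_threshold


def project_colors(img, palette=PALETTE):
    """Label each pixel with 1 + index of the nearest palette color.

    Squared Euclidean distance picks the same nearest color as Euclidean distance.
    """
    diff = img.astype(float)[:, :, None, :] - palette[None, None, :, :]
    return (diff ** 2).sum(axis=-1).argmin(axis=-1) + 1


def median_smooth(labels, size=5):
    """Median over a size x size window (size must be odd), edges replicated."""
    pad = size // 2
    windows = sliding_window_view(np.pad(labels, pad, mode="edge"), (size, size))
    return np.median(windows, axis=(-2, -1)).astype(labels.dtype)


def extract_regions(img, keep_mask, palette=PALETTE, std_threshold=10.0, size=5):
    """Return {label: boolean mask} for every legend color present after cleaning."""
    labels = project_colors(img, palette)          # color projection
    labels[grey_mask(img, std_threshold)] = 0      # grey removal
    labels[~keep_mask] = 0                         # mask clipping: legend, title, logos
    labels = median_smooth(labels, size)           # smoothing
    return {k: labels == k for k in range(1, len(palette) + 1) if (labels == k).any()}


def boundary(region):
    """Region pixels with at least one 4-neighbour outside the region (a crude contour)."""
    p = np.pad(region, 1, mode="constant", constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:]
    return region & ~interior
```

Removing greys before projecting matters: white background and black borders would otherwise be forced onto whichever legend color happens to be nearest.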

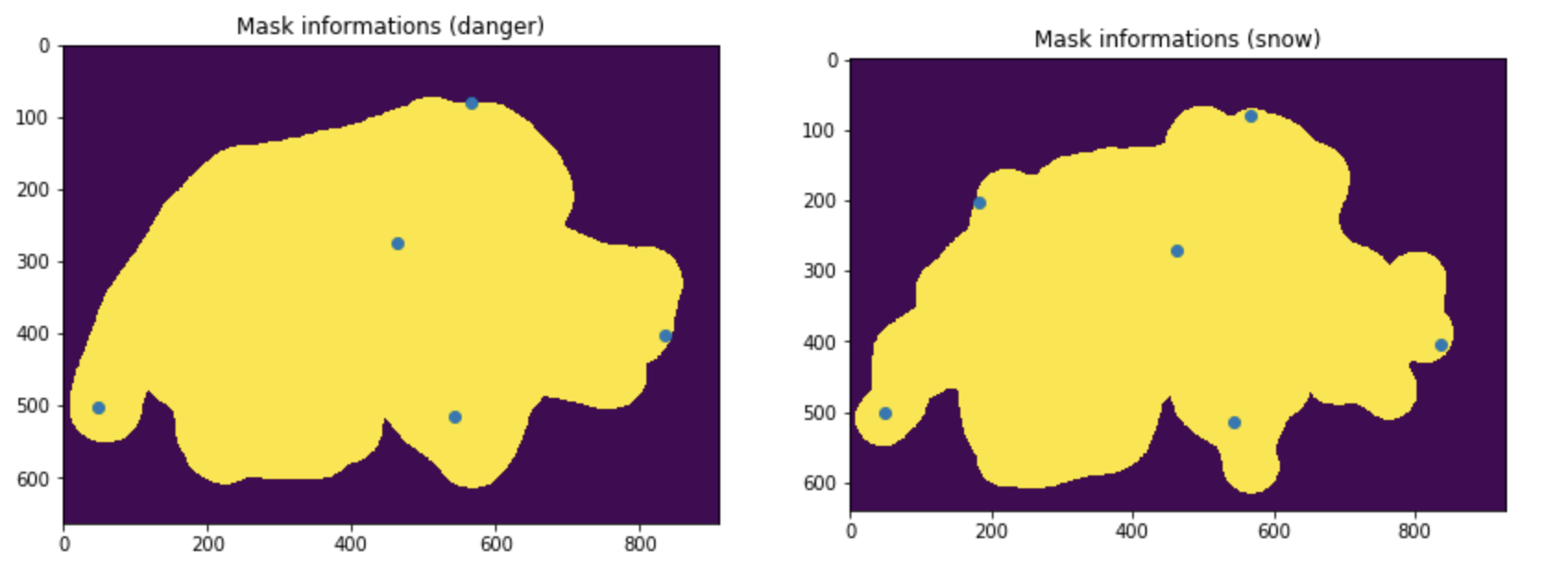

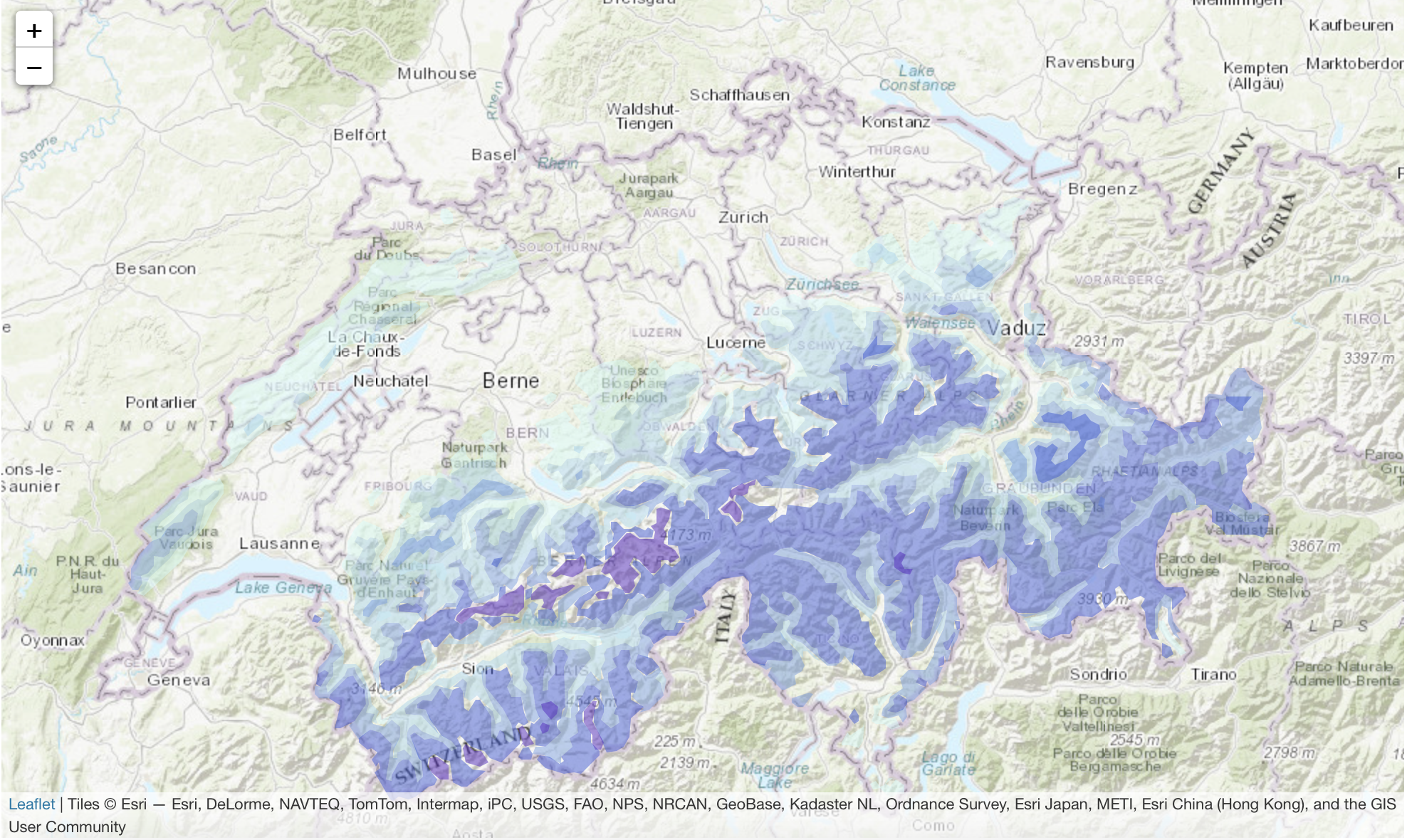

- pixel-to-geolocation projection: once we had the contours of the regions in the image (in pixels), we had to transform them into geolocated regions. To do so, we learned a mapping from pixels to geolocations. We took 6 reference points on the image and on Google Maps (blue dots above). Note that 3 points would have been enough to constrain the problem, but with a least-squares solver we could average out the small mistakes we made when picking the pixel locations of the landmarks.

- GeoJSON creation and website: to visualize the regions, we transformed them into GeoJSON, smoothed these polygons and displayed them as interactive map overlays.
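The least-squares mapping and the GeoJSON conversion described above can be sketched as follows. We assume here that the mapping is affine (a reasonable approximation over a country-sized map, and consistent with 3 points being enough: each point gives two equations and an affine map has six unknowns). The function names are hypothetical, and polygon smoothing is left out.

```python
import numpy as np


def fit_affine(pixels, lonlat):
    """Least-squares affine map from pixel (x, y) to (lon, lat).

    Assumes an affine relation: 6 unknowns, 2 equations per reference point,
    so 3 points determine it exactly and extra points average out picking errors.
    """
    pixels = np.asarray(pixels, dtype=float)
    lonlat = np.asarray(lonlat, dtype=float)
    A = np.column_stack([pixels, np.ones(len(pixels))])    # rows: [x, y, 1]
    coeffs, *_ = np.linalg.lstsq(A, lonlat, rcond=None)    # shape (3, 2)
    return coeffs


def pixel_to_geo(coeffs, pixels):
    """Apply the fitted map to an (n, 2) array of pixel coordinates."""
    pixels = np.asarray(pixels, dtype=float)
    return np.column_stack([pixels, np.ones(len(pixels))]) @ coeffs


def contour_to_feature(coeffs, contour, properties=None):
    """Turn a pixel contour [(x, y), ...] into a GeoJSON Polygon feature."""
    ring = pixel_to_geo(coeffs, contour).tolist()          # [lon, lat] order, as GeoJSON expects
    if ring[0] != ring[-1]:
        ring.append(list(ring[0]))                         # GeoJSON linear rings must be closed
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": dict(properties or {}),
    }
```

With 6 landmarks the system is overdetermined, and `np.linalg.lstsq` returns the coefficients that minimise the squared error over all of them.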

By running the Python scripts ../tools/map_extractor.py and ../tools/map_extractor_adapted2snow.py, we automated the extraction of the maps, which ran for more than 30 hours!

```shell
python3 tools/map_extractor.py data/slf/ data/map-masks/ json-maps/
```

More than 10'000 maps were extracted and converted into JSON files, which we then used for analysis and visualisation. Each JSON file contains several features; for both danger and snow maps, we assigned a date and a URL so that users can compare the extracted results with the raw data.
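As a hypothetical sketch of that file layout, each map can be stored as a GeoJSON FeatureCollection whose features carry the map date and the URL of the raw image in their properties. The property names `date` and `url`, and the helper functions, are assumptions for illustration, not the exact schema of our JSON files.

```python
import json
from datetime import date
from pathlib import Path


def to_collection(features, map_date, source_url):
    """Wrap one map's features, tagging each with the map date and raw-map URL."""
    tagged = []
    for f in features:
        props = dict(f.get("properties", {}), date=map_date.isoformat(), url=source_url)
        tagged.append({**f, "properties": props})          # input features are not mutated
    return {"type": "FeatureCollection", "features": tagged}


def features_between(collections, start, end):
    """Return the features whose map date lies in [start, end]."""
    return [f for c in collections for f in c["features"]
            if start <= date.fromisoformat(f["properties"]["date"]) <= end]


def save(collection, path):
    Path(path).write_text(json.dumps(collection))


def load_all(directory):
    """Load every *.json map file in a directory, in file-name order."""
    return [json.loads(p.read_text()) for p in sorted(Path(directory).glob("*.json"))]
```

Keeping the URL on every feature, rather than once per file, means a single region clicked on the map is enough to jump back to the original bulletin.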

3. Avalanche accidents

We downloaded the avalanche accidents, with precise coordinates for each accident, from the SLF avalanche accidents website. This dataset contains 350 accidents that happened in Switzerland over the last 20 years. For each of them, we have the date, the location, the announced danger level and the number of people who were caught, buried and killed. To visualise the data points, we built a map of the accidents by danger level, which you can find in notebooks/accidents.ipynb. You will find the resulting map under EXPLORE.
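The notebook itself is not reproduced here. A minimal sketch of the two steps behind such a map, assuming the accidents are loaded as rows with hypothetical columns `date`, `lon`, `lat`, `danger_level`, `caught`, `buried` and `killed`, could aggregate victims per danger level and emit one GeoJSON point per accident for styling by danger level.

```python
import csv
from collections import defaultdict


def read_accidents(path):
    """Read accidents from a CSV file with the hypothetical columns listed above."""
    with open(path, newline="", encoding="utf-8") as fh:
        return list(csv.DictReader(fh))


def summarize_by_danger(rows):
    """Count accidents and people caught/buried/killed per announced danger level."""
    summary = defaultdict(lambda: {"accidents": 0, "caught": 0, "buried": 0, "killed": 0})
    for row in rows:
        if not row["danger_level"]:               # skip records without an announced level
            continue
        s = summary[int(row["danger_level"])]
        s["accidents"] += 1
        for key in ("caught", "buried", "killed"):
            s[key] += int(row[key])
    return dict(sorted(summary.items()))


def to_point_features(rows):
    """One GeoJSON Point per accident, with the danger level kept for map styling."""
    return [{
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [float(r["lon"]), float(r["lat"])]},
        "properties": {"date": r["date"], "danger_level": int(r["danger_level"]),
                       "killed": int(r["killed"])},
    } for r in rows if r["danger_level"]]
```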